Validation of EO Products

(EO4GEO - Faculty of Geodesy, University of Zagreb)

Definitions and Their Source for Some Terms Commonly Used in EO Validation

| Term | Definition | Source |
|---|---|---|
| Verification | Confirmation, through the provision of objective evidence, that specified requirements have been fulfilled | ISO:9000 |
| Validation | (1) The process of assessing, by independent means, the quality of the data products derived from the system outputs; (2) verification, where the specified requirements are adequate for an intended use | CEOS/ISO:19159; VIM/ISO:99 |
| Evaluation | The process of judging something’s quality | The Cambridge Dictionary |
| Quality | Degree to which a set of inherent characteristics of an object fulfills requirements | ISO:9000 |
| Quality indicator | A means of providing a user of data or a derived product with sufficient information to assess its suitability for a particular application | QA4EO |
| Accuracy | Closeness of agreement between a measured quantity value and a true quantity value of a measurand. Note that accuracy is not a quantity and is not given a numerical quantity value | VIM/ISO:99, GUM |
| Measurement error | (1) Measured quantity value minus a reference quantity value; (2) difference between the quantity value obtained by measurement and the true value of the measurand | VIM def. 2.16; CEOS/ISO:19159 |
| Measurement uncertainty | Nonnegative parameter characterizing the dispersion of the quantity values being attributed to a measurand | VIM def. 2.26 |

Validation in Earth Observation (Remote Sensing)

- Validation refers to the process of assessing the uncertainty of higher-level, satellite-sensor-derived products by analytical comparison to reference data, which are presumed to represent the true value of an attribute.

- Validation of the satellite data itself (images and other directly measured values) is also performed.

- Validation is an essential component of any Earth observation program.

- It enables the independent verification of the physical measurements obtained by a sensor as well as any derived products.

Validation Framework

- Validation and uncertainty assessment are crucial requirements for a satellite data product from the end-user perspective.

- The term "validation" can mean many things:

- The geometric aspects of satellite images are validated.

- The radiometric aspects of satellite images are validated.

- The results of satellite image processing (spectral indices, extraction of individual objects, classification, land surface temperature (LST), ...) can also be validated.

- Validation of land products is a procedure for assessing the accuracy of satellite-derived products and quantifying their uncertainty by analytical comparison with reference data.
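For classification products, the comparison with reference data is commonly summarized in a confusion matrix. The sketch below computes overall, producer's, and user's accuracy from such a matrix; the row/column convention, the class counts, and the function name `accuracy_measures` are illustrative assumptions, not taken from the source.

```python
import numpy as np

def accuracy_measures(confusion) -> dict:
    """Accuracy measures from a confusion matrix.

    Assumed convention (hypothetical): rows = classes in the EO-derived map,
    columns = classes in the reference data.
    """
    confusion = np.asarray(confusion, dtype=float)
    correct = np.diag(confusion)
    total = confusion.sum()
    return {
        # share of all samples that the map labels correctly
        "overall": correct.sum() / total,
        # per class: correctly mapped / all reference samples of that class
        "producers": correct / confusion.sum(axis=0),
        # per class: correctly mapped / all map samples of that class
        "users": correct / confusion.sum(axis=1),
    }

# Hypothetical 3-class example (e.g., water, forest, urban)
cm = np.array([[50, 3, 2],
               [4, 40, 6],
               [1, 2, 42]])
acc = accuracy_measures(cm)
print(round(acc["overall"], 3))  # → 0.88
```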

Verification in Earth Observation (Remote Sensing)

- Verification here means confirmation, through the provision of objective evidence, that specified requirements have been fulfilled.

- Validation is a specific case of verification, which takes into account the intended use of the data products.

Reference Measurements

- A key component of any validation is a consistency check, i.e., a comparison of remote sensing data with reference measurements.

- Reference measurements are assumed to represent the truth (at least within their own uncertainties).

- The EO and reference data are (in an ideal case) both linked to a stated metrological reference through an unbroken chain of calibrations or comparisons.

- In practice, reference data can range from fully SI (Système International) traceable to loosely agreed community standards.

- Ground measurements that are fully characterized and traceable are often called Fiducial Reference Measurements (FRM).

Reference Measurements: 2

- In practice, EO data are rarely fully traceable.

- Basic calibrations are performed under laboratory conditions that cannot be repeated in space.

- Therefore, comparison with reference measurements in a validation exercise is often the only way to link EO data back to an agreed standard.

“Error” and “Uncertainty”

- What exactly is meant by measurement uncertainty?

- The terms "error" and "uncertainty" are often used interchangeably in the scientific community.

- In metrology, measurement uncertainty is the expression of the statistical dispersion of the values attributed to a measured quantity.

- The measurement error is the difference between the measured value and the true value.

- The measurement error can contain both a random and a systematic component.
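A small simulation can make the distinction concrete. In this sketch (all values hypothetical), each measurement equals a known true value plus a constant systematic offset and random noise; the mean of the errors then estimates the systematic component and their standard deviation the random one. In real validation the true value is unknown, which is why uncertainties, rather than errors, are what can actually be stated.

```python
import numpy as np

rng = np.random.default_rng(42)

true_value = 290.0   # hypothetical true value of the measurand (K)
systematic = 0.8     # hypothetical constant bias of the instrument (K)
random_sd = 0.5      # hypothetical standard deviation of the random noise (K)

measured = true_value + systematic + rng.normal(0.0, random_sd, size=10_000)
errors = measured - true_value        # measurement error of each observation

bias_estimate = errors.mean()         # estimate of the systematic component
noise_estimate = errors.std(ddof=1)   # estimate of the random component's dispersion
print(f"bias ≈ {bias_estimate:.2f} K, random SD ≈ {noise_estimate:.2f} K")
```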

Uncertainty in Systems and Reference Data

- Uncertainties in the reference and EO measurements are derived from a consideration of the calibration chain in each system and the statistical properties of outputs of the measurement system.

- The Guide to the Expression of Uncertainty in Measurement (GUM) [JCGM, 2008, 2009, 2011] prescribes how these component uncertainties are combined to give an overall uncertainty associated with the measurements from each system, denoted here as u_x and u_y.

Joint Committee for Guides in Metrology (JCGM) (2008), Evaluation of measurement data—Guide to the expression of uncertainty in measurement, Tech. Rep., BIPM, Sèvres, France. (https://www.bipm.org/utils/common/documents/jcgm/JCGM_100_2008_E.pdf)

Joint Committee for Guides in Metrology (JCGM) (2009), Evaluation of measurement data—An introduction to the ‘Guide to the expression of uncertainty in measurement’ and related documents, Tech. Rep., BIPM, Sèvres, France. (https://www.bipm.org/utils/common/documents/jcgm/JCGM_104_2009_E.pdf)

Joint Committee for Guides in Metrology (JCGM) (2011), Evaluation of measurement data—Supplement 2 to the “Guide to the expression of uncertainty in measurement”: Extension to any number of output quantities, Tech. Rep., BIPM, Sèvres, France. (https://www.bipm.org/utils/common/documents/jcgm/JCGM_102_2011_E.pdf)
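For uncorrelated input quantities, the GUM law of propagation of uncertainty combines the component standard uncertainties as a root sum of squares weighted by sensitivity coefficients. The sketch below assumes uncorrelated components (correlated ones would need covariance terms) and uses a hypothetical three-component budget; `combined_standard_uncertainty` is an illustrative name.

```python
import math

def combined_standard_uncertainty(components, sensitivities=None):
    """Combine uncorrelated standard uncertainties (GUM law of propagation).

    components:    standard uncertainties u(x_i) of the input quantities
    sensitivities: sensitivity coefficients c_i = df/dx_i (default 1.0 each)
    """
    if sensitivities is None:
        sensitivities = [1.0] * len(components)
    if len(sensitivities) != len(components):
        raise ValueError("one sensitivity coefficient per component is required")
    return math.sqrt(sum((c * u) ** 2 for c, u in zip(sensitivities, components)))

# Hypothetical budget for one measurement system, all in the same units
# (e.g., calibration, instrument noise, retrieval)
u_x = combined_standard_uncertainty([0.3, 0.4, 1.2])
U_x = 2 * u_x  # expanded uncertainty with coverage factor k = 2
print(round(u_x, 3), round(U_x, 3))  # → 1.3 2.6
```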

Uncertainty due to Imperfect Spatiotemporal Collocation

- In the validation process, the uncertainty due to imperfect spatiotemporal collocation must be taken into account in the consistency check.

- This uncertainty is not related to an individual measurement.

- Instead, it arises from differences in the sampling and smoothing properties of different measurement systems.
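One common way to write the consistency check is to require that the difference of two collocated measurements stays within k times the combined uncertainty, with the collocation uncertainty added as an extra term. The sketch assumes independent uncertainties and a coverage factor k = 2; all values are hypothetical. It shows how ignoring the collocation term can make a consistent pair look inconsistent.

```python
import math

def is_consistent(x, y, u_x, u_y, sigma_colloc=0.0, k=2.0):
    """Return True if two collocated measurements agree within their uncertainties.

    Assumes independent (uncorrelated) uncertainties u_x and u_y plus an
    additional collocation uncertainty sigma_colloc; k is the coverage factor.
    """
    combined = math.sqrt(u_x**2 + u_y**2 + sigma_colloc**2)
    return abs(x - y) <= k * combined

# Hypothetical collocated pair: satellite (x) vs reference (y), same units
print(is_consistent(x=21.4, y=20.1, u_x=0.5, u_y=0.2))                    # False
print(is_consistent(x=21.4, y=20.1, u_x=0.5, u_y=0.2, sigma_colloc=0.5))  # True
```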

The Validation Process

- The aim of validation is, in principle, straightforward.

- Its actual implementation, however, is an extensive process:

- each individual step is subject to various assumptions and potentially requires user decisions, which can make the process subjective.

- Within most communities, detailed validation protocols have been established, tailored to the specific products and validation aims.


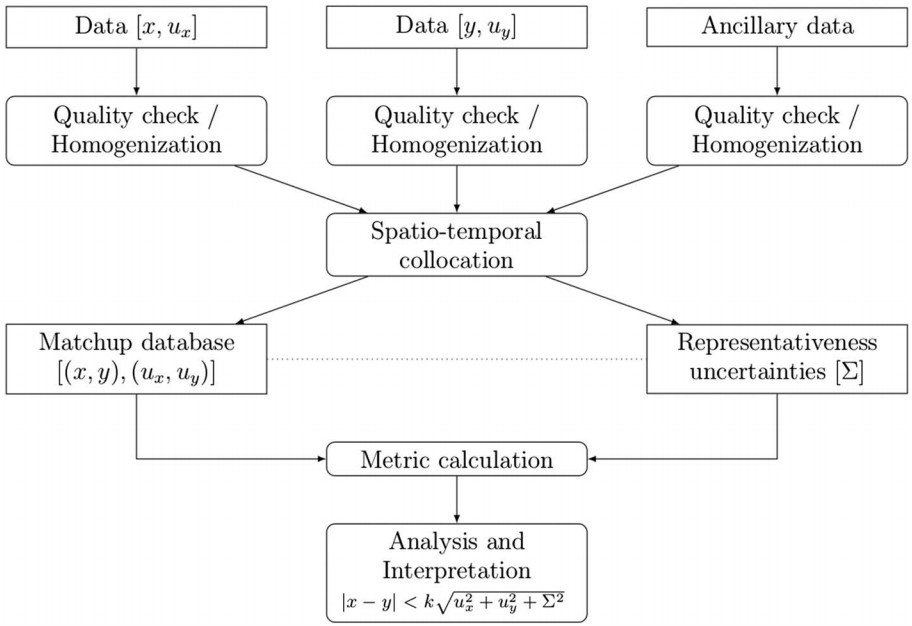

Schematic overview of the general validation process (source: https://agupubs.onlinelibrary.wiley.com/doi/full/10.1002/2017RG000562)

The Validation Process - Data

- Ideally, the input data x and y (e.g., satellite data and reference data) to the validation process would be traceable to SI reference standards.

- In practice, this is rarely the case.

- The choice of reference data is often a pragmatic decision.

The Validation Process - Data 2

- These decisions, therefore, affect the overall credibility of the validation results.

- Typical considerations in this regard include the following questions:

- (1) Do the data provide scientifically meaningful estimates of the investigated geophysical quantity?

- (2) Do these data sufficiently cover the potential parameter space?

- (3) Are the data expected to be accurate enough to draw the desired conclusions from the validation process?

- (4) Are the data publicly available and accessible?

The Validation Process - Quality Checking

- Many data providers include information on data quality within their data sets.

- Data quality information can be provided as simple binary flags (good/poor data quality) or gradated flags representing a number of different quality levels.

- Alternatively, data may be provided with quantitative uncertainty measures.

- In some cases, additional checks may be required, such as:

- checking the physical plausibility of a particular measurement,

- visually inspecting the data, or

- testing temporal consistency.
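The checks listed above can be combined into a single mask. This is a minimal sketch assuming a hypothetical flag convention (0 = good), a plausibility range for soil moisture in m³/m³, and a simple step test for temporal consistency; real flag definitions and thresholds are product specific.

```python
import numpy as np

def quality_mask(values, flags, valid_range, max_step):
    """Return a boolean mask of observations that pass basic quality checks.

    - provider flag check (hypothetical convention: only flag == 0 is kept),
    - physical plausibility check (value within valid_range),
    - simple temporal consistency check (jump from previous sample <= max_step).
    Note: a single spike also fails the sample that follows it.
    """
    values = np.asarray(values, dtype=float)
    flags = np.asarray(flags)
    lo, hi = valid_range
    ok_flag = flags == 0
    ok_range = (values >= lo) & (values <= hi)
    # absolute change from the previous time step; the first sample gets 0
    step = np.abs(np.diff(values, prepend=values[0]))
    ok_time = step <= max_step
    return ok_flag & ok_range & ok_time

# Hypothetical soil moisture time series (m3/m3) with provider flags
values = [0.21, 0.22, 0.95, 0.23, 0.24, -0.05]
flags = [0, 0, 0, 1, 0, 0]
mask = quality_mask(values, flags, valid_range=(0.0, 0.6), max_step=0.1)
print(mask)
```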

The Validation Process - Spatiotemporal Collocation

The following concerns are to be addressed:

- Collocated measurements should be close to each other relative to the spatiotemporal scale on which the variability of the geophysical field becomes comparable to the measurement uncertainties.

- If possible, differences in spatiotemporal resolution (horizontal, vertical, and temporal) should be minimized.

- The collocation criteria should take into account the need for sufficient collocated pairs for a robust statistical analysis. This need is often at odds with the first concern, so a compromise must be made.
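The trade-off between closeness and sample size can be seen in a simple nearest-match collocation with spatial and temporal thresholds. The sketch assumes a spherical Earth, times in hours, and tuple-based records; `collocate`, `max_km`, and `max_hours` are hypothetical names. Tightening the thresholds reduces the collocation mismatch but also the number of pairs.

```python
import math

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km on a spherical Earth (radius 6371 km)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))

def collocate(sat_obs, ref_obs, max_km, max_hours):
    """Pair each reference observation with the closest satellite observation.

    Records are (lat, lon, time_in_hours, value) tuples. Candidates must lie
    within max_hours in time and max_km in space; among them, the spatially
    closest one is kept. Reference points without a candidate are dropped.
    """
    pairs = []
    for rlat, rlon, rt, rval in ref_obs:
        best = None
        for slat, slon, st, sval in sat_obs:
            if abs(st - rt) > max_hours:
                continue
            d = haversine_km(rlat, rlon, slat, slon)
            if d <= max_km and (best is None or d < best[0]):
                best = (d, sval)
        if best is not None:
            pairs.append((best[1], rval))
    return pairs

# Hypothetical satellite and reference records
sat = [(45.80, 15.97, 10.0, 0.25), (45.90, 16.10, 10.2, 0.28), (46.50, 16.50, 11.0, 0.30)]
ref = [(45.81, 15.98, 10.5, 0.24), (47.50, 18.00, 10.0, 0.20)]
pairs = collocate(sat, ref, max_km=25.0, max_hours=1.0)
print(pairs)  # [(0.25, 0.24)]
```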

The Validation Process - Spatiotemporal Collocation 2

Broadly speaking, there are two categories of collocation methods:

- those that keep the data on their original grids and select the closest matches, and

- those that use interpolation and aggregation techniques (e.g., regridding, resampling, and kriging) to bring both data sets onto the same grid and temporal scale.
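As an example of the second category, a fine-resolution product can be aggregated onto a coarser grid by block averaging. This sketch assumes the grids are aligned and the coarsening factor divides the array shape exactly; regridding with interpolation or kriging would need other tools.

```python
import numpy as np

def block_average(field, factor):
    """Aggregate a 2-D field onto a grid that is `factor` times coarser.

    Assumes aligned grids and dimensions that are exact multiples of `factor`.
    NaNs (e.g., masked pixels) are ignored; an all-NaN block yields NaN
    (with a RuntimeWarning from numpy).
    """
    field = np.asarray(field, dtype=float)
    ny, nx = field.shape
    if ny % factor or nx % factor:
        raise ValueError("field shape must be a multiple of factor")
    blocks = field.reshape(ny // factor, factor, nx // factor, factor)
    return np.nanmean(blocks, axis=(1, 3))

fine = np.arange(16, dtype=float).reshape(4, 4)  # hypothetical fine-resolution product
coarse = block_average(fine, 2)                  # 4x4 -> 2x2 to match a coarser grid
print(coarse)  # [[ 2.5  4.5] [10.5 12.5]]
```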

The Validation Process – Homogenization and Metric Calculation

- In many cases, further homogenization between the two data sets is necessary before actual differences can be computed.

- This may include homogenizing (transforming) the radiometric and spatial resolutions of the data sets.

- Units and other representation conversions are often required, and these can introduce additional uncertainties.

- The choice of metrics used within the validation process depends on the application and data available.
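Which metrics are appropriate depends on the application, but bias, root-mean-square error (RMSE), unbiased RMSE (the RMSE after removing the bias), and correlation are widely used for continuous variables. The sketch assumes already collocated and homogenized arrays in the same units; the sample values are hypothetical.

```python
import numpy as np

def validation_metrics(x, y):
    """Common validation metrics for collocated EO (x) and reference (y) values."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    d = x - y                                   # differences
    bias = d.mean()                             # systematic component
    rmse = np.sqrt(np.mean(d**2))               # total error
    ubrmse = np.sqrt(np.mean((d - bias) ** 2))  # RMSE after removing the bias
    r = np.corrcoef(x, y)[0, 1]                 # Pearson correlation
    return {"bias": bias, "rmse": rmse, "ubrmse": ubrmse, "r": r}

m = validation_metrics([0.25, 0.30, 0.22, 0.35], [0.20, 0.28, 0.21, 0.30])
print(m)
```

By construction, RMSE² = bias² + ubRMSE², which is one reason to report the systematic and random parts separately.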

The Validation Process – Analysis and Interpretation

- Once the final metrics have been obtained, it must be judged whether the results comply with the requirements.

- Following the definition of validation (see the definitions table at the beginning), this implies verifying that the data set is suitable for a specific application.

- In many cases, a single application does not exist.

- Requirements may then be numerous, so validation targets need to be defined that can be checked for compliance on an individual basis.
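Checking individual validation targets can be as simple as applying one test per metric. The thresholds below are purely hypothetical placeholders for what user requirements would specify; keeping a per-target result, rather than a single pass/fail, reflects the individual checking described above.

```python
# Hypothetical validation targets; real thresholds come from user requirements
TARGETS = {
    "bias":   lambda v: abs(v) <= 0.02,  # e.g., |bias| <= 0.02 m3/m3
    "ubrmse": lambda v: v <= 0.04,       # e.g., ubRMSE <= 0.04 m3/m3
    "r":      lambda v: v >= 0.7,        # e.g., correlation >= 0.7
}

def check_compliance(metrics, targets=TARGETS):
    """Check each metric against its own target and report per target."""
    return {name: bool(test(metrics[name])) for name, test in targets.items()}

result = check_compliance({"bias": 0.0325, "ubrmse": 0.018, "r": 0.93})
print(result)  # {'bias': False, 'ubrmse': True, 'r': True}
```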

The Validation Process – Activities and Tools for Quality Assurance and EO Data Validation

- Several research projects have been devoted to establishing best practices for the production of traceable, quality-assured EO data products, as well as practices for their validation.

- The deliverables of these projects and international activities are not necessarily published in the peer-reviewed literature, but they nevertheless represent a rich set of valuable information.

Some Projects and Activities Related to EO Data Validation and Available Resources

| Project | Description | References |
|---|---|---|
| IPWG | Validation resources and server for precipitation data validation | http://www.isac.cnr.it/~ipwg/calval.html |
| QA4ECV (FP7) | Quality assurance for essential climate variables | http://www.qa4ecv.eu; online traceability |
| GAIA-CLIM (H2020) | Improving nonsatellite atmospheric reference data, supporting their use in satellite validation, and identifying gaps | http://www.gaia-clim.eu; The GAIA-CLIM Virtual Observatory; Deliverables D2.8 |
| CEOS-LPV | Protocols for variable-specific validation and coordination of validation across land products | https://lpvs.gsfc.nasa.gov/ |
| ESA CCI | CCI Project guidelines | http://cci.esa.int/sites/default/files/ESA_CCI_Project_Guidlines_V1.pdf |
| GEWEX water vapor assessment | Overview of satellite, in situ, and ground-based water vapor data records; an archive of global long-term water vapor data records on a common grid and period for intercomparison; and collocated radiosonde data from two different multistation long-term radiosonde archives | http://gewex-vap.org/ |
| RFA | Overview of satellite and ground-based radiation data records on a common grid for intercomparison | https://gewex-rfa.larc.nasa.gov/ |
| OLIVE | Evaluation and cross comparison of land variables | Weiss et al. [2014]; http://calvalportal.ceos.org/web/olive |

These and some other projects can be found at: https://agupubs.onlinelibrary.wiley.com/doi/full/10.1002/2017RG000562

Mathematical Basis - Differences Between Data Sets

|

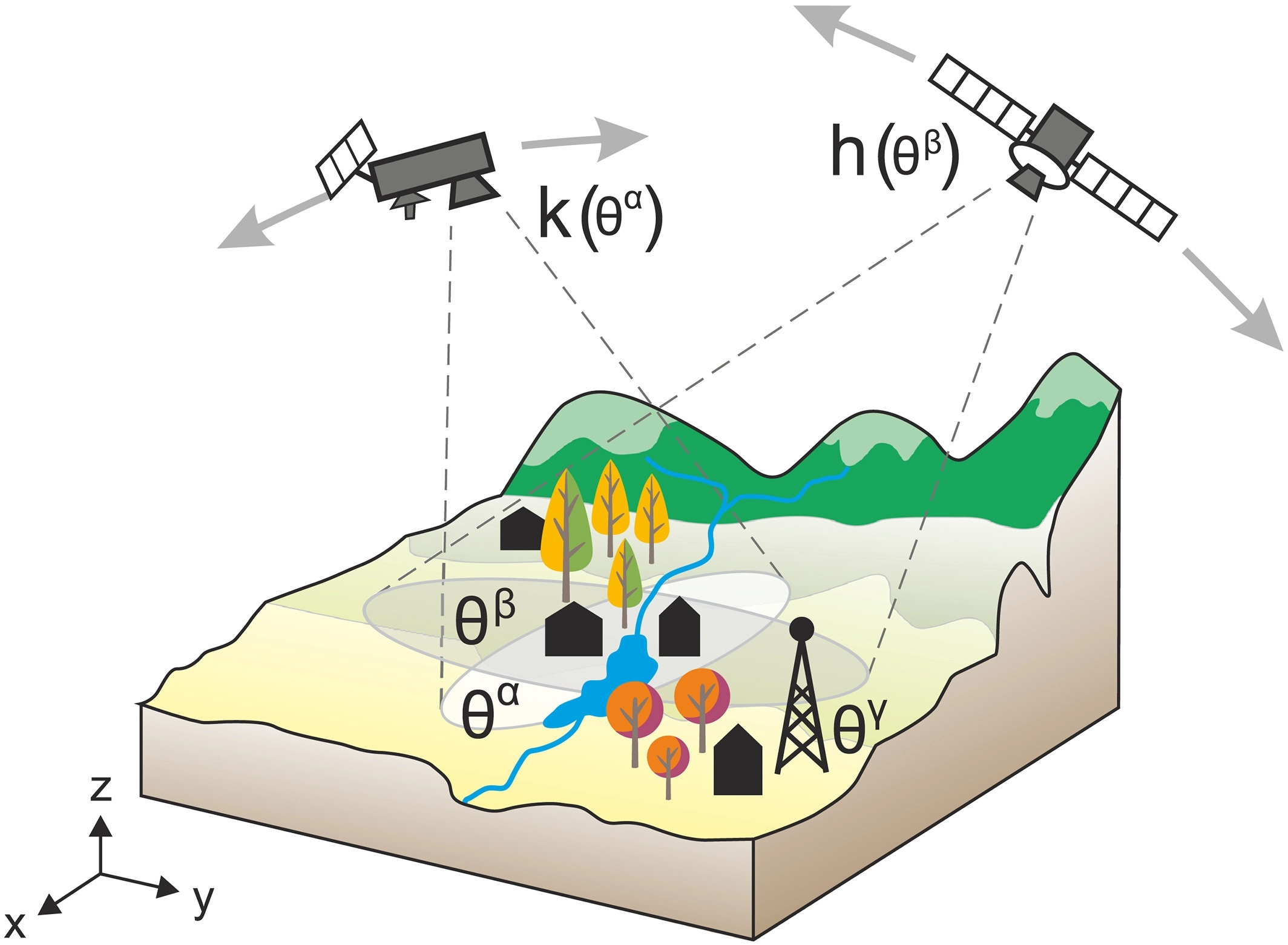

General sketch of the validation problem. A true, but unknown field of a geophysical variable 𝜃 is observed by different measurement systems on different spatial (and temporal) scales, denoted as 𝛼, 𝛽, and 𝛾, using nonlinear mapping functions (h, k). |
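One way to write the sketch down, assuming additive random errors ε_x and ε_y (this notation, and the pairing of h with scale 𝛼 and k with scale 𝛽, are assumptions made here for illustration), is:

```latex
x = h(\theta_\alpha) + \varepsilon_x, \qquad
y = k(\theta_\beta) + \varepsilon_y, \qquad
\delta = x - y = h(\theta_\alpha) - k(\theta_\beta) + \varepsilon_x - \varepsilon_y
```

The differences 𝛿 thus mix the effects of the mapping functions, the random errors, and the difference in scales.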

Mathematical Basis - Probability Density Functions

- For validation purposes, we are not primarily interested in the differences for each individual pair of observations, but in the underlying probability density function (PDF) of the differences 𝛿, denoted f𝛿.

- The uncertainty assessment requires a detailed understanding of the individual contributions to the PDF, i.e.:

- systematic effects due to individual measurement devices and retrieval procedures (h, k), including large-scale systematic effects due to instrument calibration or harmonization;

- random measurement and retrieval errors; and

- differences in the representativeness of different data sets (again including random and systematic components).
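The PDF f𝛿 can be estimated empirically from the collocated differences, for example with a normalized histogram. The sketch uses synthetic differences (a hypothetical constant offset plus Gaussian scatter); note that the histogram alone cannot tell which of the contributions listed above produced the offset or the spread.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical differences delta = x - y for 5000 collocated pairs:
# a constant systematic offset plus random scatter
delta = 0.03 + rng.normal(0.0, 0.05, size=5000)

# Empirical estimate of f_delta as a normalized histogram
density, edges = np.histogram(delta, bins=50, density=True)
widths = np.diff(edges)

print(np.sum(density * widths))         # integrates to 1 up to floating-point rounding
print(delta.mean(), delta.std(ddof=1))  # location (systematic) and spread (random)
```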

Advanced Validation Approaches and Strategies - Spectral Methods

- Several studies in different communities have demonstrated the use of spectral methods as validation techniques over a broad range of data sources and timescales.

Spectral Methods - Fourier-Based Approaches

- These approaches build on the standard Fourier transform, which resolves the frequencies underlying the signal of interest.

- One approach provides a mathematical description of the mean-square error (MSE) as an index of accuracy when spaceborne measurements are used to estimate ground measurements (point gauges).

- Another approach detects characteristic features of erroneous soil moisture (SM) estimates from EO data in the frequency domain.

- Results indicate that higher retrieval error standard deviations go along with higher vegetation/leaf area index, rainfall, and SM.
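The basic idea of a frequency-domain comparison can be illustrated with periodograms: random retrieval error adds roughly flat (white) power, which dominates the high-frequency part of the satellite spectrum. This is a generic sketch with synthetic daily series, not the specific MSE formulation or SM method referred to above; the 5-day cutoff is an arbitrary choice.

```python
import numpy as np

def power_spectrum(series, dt=1.0):
    """One-sided periodogram of a mean-removed, evenly sampled time series."""
    series = np.asarray(series, dtype=float)
    spec = np.abs(np.fft.rfft(series - series.mean())) ** 2
    freqs = np.fft.rfftfreq(series.size, d=dt)
    return freqs, spec

rng = np.random.default_rng(1)
t = np.arange(365)  # hypothetical daily samples over one year
reference = np.sin(2 * np.pi * t / 365) + 0.5 * np.sin(2 * np.pi * t / 30)
satellite = reference + rng.normal(0.0, 0.3, t.size)  # same signal plus random error

f, p_ref = power_spectrum(reference)
_, p_sat = power_spectrum(satellite)

high = f > 0.2  # frequencies above 0.2 cycles/day, i.e., periods shorter than 5 days
ratio = p_sat[high].sum() / p_ref[high].sum()
print(f"high-frequency power ratio satellite/reference: {ratio:.1f}")
```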

Advanced Validation Approaches - Consistency Through Process Models

- Process models reflect our understanding of the relevant processes governing the system under observation and of their interaction.

- They can ensure properties such as conservation of mass, energy, or momentum.

- Such models can be used in the validation context in various ways.
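A simple example of such a consistency check is water budget closure for a river basin, which follows from conservation of mass. The sketch assumes all terms are in mm over the same period; the values are hypothetical, and attributing a nonzero residual to a specific component would require further analysis.

```python
def water_budget_residual(precip, evap, runoff, storage_change):
    """Residual of the basin water balance P - ET - R - dS (all in mm per period).

    With perfect data the residual is zero (conservation of mass); a large
    residual points to errors in at least one of the inputs.
    """
    return precip - evap - runoff - storage_change

# Hypothetical monthly basin values (mm): EO-derived P, ET and dS, gauged runoff R
residual = water_budget_residual(precip=95.0, evap=60.0, runoff=20.0, storage_change=5.0)
print(residual)  # 10.0
```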

Advanced Validation Approaches - Indirect Validation

- Indirect validation approaches map the observational data product to a secondary variable, which is then compared against reference data of that secondary variable.

- The performance of the secondary variable (in comparison with reference data) provides information on the quality of the observational data product.

Advanced Validation Approaches - The Role of Data Assimilation for EO Validation

- Satellite data play a key role in current data assimilation systems within numerical weather prediction models.

- The direct assimilation of passive microwave and infrared radiance data has had a particularly large beneficial impact on analysis and forecast quality [Joo et al., 2013].

- Key challenges include the lack of traceable radiometric measurements from on-orbit satellite radiometers and a lack of traceable ground truth observations against which to validate the satellite observations.

Advanced Validation Approaches - Assessing Uncertainty From Scale Mismatch

- For atmospheric variables such as temperature, water vapor, or trace gas concentrations, reference measurements are usually sparse in space and time compared with satellite-based measurements, which have much wider coverage.

- This makes it difficult to find strict collocation criteria, magnification methods, or even an estimate of the collocation uncertainty involved in the comparison.

Advanced Validation Approaches - Assessing Uncertainty From Scale Mismatch 2

Several strategies have been devised to overcome this issue:

- Taking more than one reference point measurement.

- Estimating the collocation errors (and the resulting uncertainties) when comparing single reference measurements with satellite data using …

- When only a single reference measurement is made, for example, a radiosonde measurement compared with a satellite-based one, the collocation uncertainties become nonnegligible.
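If a high-resolution surrogate field is available (for example, from a model; this is an assumption here), the collocation uncertainty between a point measurement and a satellite footprint can be estimated by sampling: compare a single pixel inside each footprint-sized window with the window mean. The result can serve as the collocation term in the consistency check. The field, footprint size, and sample count below are hypothetical.

```python
import numpy as np

def collocation_sd(field, footprint, n_samples, rng):
    """Estimate collocation (representativeness) uncertainty from a surrogate field.

    For random footprint-sized windows of a high-resolution 2-D field, compare the
    value at one randomly chosen pixel (a 'point' reference measurement) with the
    window mean (what a satellite pixel would see). Returns the standard deviation
    of these differences.
    """
    ny, nx = field.shape
    diffs = np.empty(n_samples)
    for i in range(n_samples):
        r0 = rng.integers(0, ny - footprint + 1)
        c0 = rng.integers(0, nx - footprint + 1)
        window = field[r0:r0 + footprint, c0:c0 + footprint]
        point = window[rng.integers(footprint), rng.integers(footprint)]
        diffs[i] = point - window.mean()
    return diffs.std(ddof=1)

rng = np.random.default_rng(7)
# Hypothetical surrogate field: large-scale gradient plus small-scale variability
yy, xx = np.mgrid[0:200, 0:200]
field = 0.01 * xx + rng.normal(0.0, 0.2, size=(200, 200))
sigma_colloc = collocation_sd(field, footprint=10, n_samples=2000, rng=rng)
print(sigma_colloc)
```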

The Ultimate Goal of a Validation

- To assess whether a data set is compliant with predefined benchmarks (requirements) that quantify whether a data set is suitable for a particular purpose.

- It is therefore essential that the metric itself is unambiguous and fully captures the data quality.

The Ultimate Goal of a Validation 2

The following prerequisites apply to a proper definition of requirements to be used as validation targets or benchmarks:

- A clear specification of the spatial and temporal domains for each metric is essential.

- The mathematical details of the metric calculations have to be provided.

- It needs to be specified under which conditions a data set would be considered to fulfill the specified requirements.

- Clear separation between systematic and random error components is required.

Recommendations for Validation Frameworks

- Refine user requirements.

  - Current definitions of user requirements are very often based on scalar metrics.

  - The motivation for these scalar metrics needs to be much more traceable.

- Beyond scalar metrics.

  - Advanced validation methods should gain widespread use.

  - These require a thorough understanding of the error characteristics of both the satellite and the reference data.

- Collection of reference data.

  - Traceable approaches for the collection of reference data for EO validation are needed.

  - The Committee on Earth Observation Satellites (CEOS) Working Group on Calibration and Validation has already defined good standards, but these are not necessarily available for all Essential Climate Variables (ECVs).

Recommendations for Validation Frameworks 2

- Traceable data production.

  - Traceable data production chains are required that allow the production method to be traced back, including full traceability of the satellite and ancillary data used and their uncertainties.

- Sustained fiducial references.

  - The sustained availability of fiducial reference data is not ensured for any of the communities working with EO data.

  - Validation with fiducial references covering the spectrum of the natural variability of a geophysical variable is considered essential for all validation approaches.

- Validation tools.

  - Traceable, open-source tools for the validation of EO data currently do not exist.

Thank you for your attention.

All references can be found at: https://agupubs.onlinelibrary.wiley.com/doi/full/10.1002/2017RG000562